Balancing innovation and oversight – regulating the AI revolution

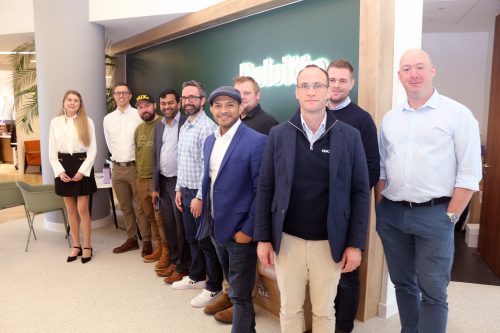

Experts at our AI round table broadly agreed that regulation of the sector was needed – but highlighted challenges facing regulators and the potential for regulation to do more harm than good.

The round table was hosted and sponsored by Deloitte.

Sherin Matthew, founder of AI Tech UK, has long been a proponent of regulating the use of AI. He’s developed Smart Ethics, an open ethics management platform to help firms integrate regulations and ethical considerations to manage disruption and certify innovation.

“What we’re seeing today, thanks to the impact of generative AI like ChatGPT, is the imminent need for intellectual security.

“There is huge concern. I can see there is a common theme around this round table of using AI for augmentation, but what happens when someone uses it without a certain level of intellectual authority?

“We all drive cars. We have DVLA-approved driving licences. If tomorrow there are driverless cars, who will have the authority? From an AI perspective there are huge challenges – first and foremost, not everyone is aware of what’s happening underneath the hood. There’s a clear lack of awareness.

“Even experts are struggling to understand the depth of impact of risk. Government policymakers are reacting to experts telling them, but again there’s a huge depth of complexity here. Every country, every institute has its own framework, which means there’s a proliferation of hundreds of frameworks, which creates more confusion and chaos.”

Kane Simms, founder of customer experience firm Vux World, said, “One of the challenges is that most firms are using the output of a model that’s been created. The legislation, as and when it comes, really applies to the people and companies building the models – and right now there are only about five of these companies.

“The challenge is that the vast majority of the world – apart from governments, who have some sway over these entities – has very little control. You can follow whatever best practice you can, once you have hold of a model, and you can fine-tune it. But the underlying data that sits underneath is not something anyone can do anything about.”

Adam Roney, who practised as a lawyer before building digital transformation agency Calls9, said that although he was generally opposed to the regulation of ideas, he recognised that regulation brought certainty.

“This is a tricky space. If you follow the lifecycle of the event, which bit do you regulate? I do think that as an end user you have a responsibility to understand what you’re consuming. I’m not sure the tooling really exists for that at the moment. I think there’s a whole ecosystem that needs to develop so that when a piece of comms comes to you, you get to understand whether a human produced it or an AI produced it.”

Eric Applewhite, director of analytics at Deloitte, said, “Regulation exists because we’re trying to choose between value conflicts and society, which are always important. It’s important when we use AI, if it’s going to be used to make life or death decisions, that it’s regulated as a medical device. It is important that when our data is used in a western society, there are certain guardrails and safeguards around how it is used. It is important that when I’m creating value, and investing to create that value, my IP is protected, and I can continue to go forward with my business without any Tom, Dick or Harry just being able to raid what I’ve done and generate their own value.

“So I’m a fan of regulation, because it needs to do all of that. Having said that, I’ve personally seen examples where the heaviness of regulation, and inexperience with the burden of regulation, actually stifle the ability to generate value. Your plane sits on the runway, it never gets to take off, and the money is wasted.”

This is the second of two reports from the AI round table. The first looked at using AI to augment skills and efficiencies in business.